TLDR;

The documented minimum permissions required for using the synapsesql connector for spark to read or write data from Synapse SQL Pools is giving high privileges to spark users even though the required operation is only read. In this article, I’ll provide a workaround

Notebooks in Synapse

Azure Synapse Analytics’ most appealing feature at first glance is the Synapse Studio. One unified UX across data stores, notebooks and pipelines. Notebook experience is appreciated the most among folks who read a load of data that takes minutes or hours to load then do operations on it whether in data engineering, feature engineering or ML training. The ability to divide your code into smaller chunks that you control which to execute when is a powerful productivity tool.

Reading and writing Synapse SQL from Synapse Spark

Synapse spark has synapsesql connector that allows reading and writing from/to Synapse SQL to/from dataframe which is a cornerstone in the integration story between Synapse SQL & Synapse spark.

How the synapsesql works

For the sake of this article, I’ll go through the reading scenario only. Let’s say you are executing a cell that contains this code

%%spark

val df_read=spark.read.synapsesql("retaildw.loading.fact_Sale").limit(100000)

display(df_read)

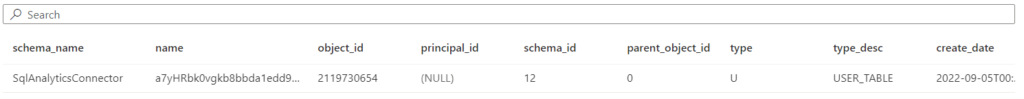

synapsesql connector reads by creating an external table in a schema called SqlAnalyticsConnector and write to this external table the first 100000 records in the fact_sales table

To this this in action, consider executing this query while running the above cell

select s.name as schema_name,xt.* from sys.external_tables xt

join sys.schemas s on xt.schema_id=s.schema_id

Where s.name='SqlAnalyticsConnector'

order by create_date desc

The result would be something like this

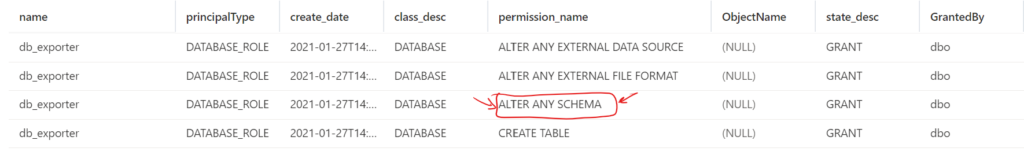

The required permissions

According to the docs the synapsesql connector requires the SQL login mapped to the user running spark to be a member of the db_exporter role. Regardless of the operation is reading or writing. This imposes a security risk because the permissions granted to the db_exporter are

As you see, the problematic permission is Alter Any Schema which allows the login to drop any table in any schema in the database.

For the security-conscious organizations, this is not a desired state. When this problem was presented to me from one of my customers whom I really thank them for always striving for the best security practices, I had to do some hacking 😉

The Hack

Every time the spark data engineer use synapsesql to read from the SQL Pool

1) If not exist, a new schema called SqlAnalyticsConnector created.

2) a new external table created with random name, this is a permanent table not temp table as it’s known in SQL Server and SQL Pools however the table is deleted after finishing the reading.

3) This external table points to a folder in the file system used as the primary file system of the Synapse workspace.

4) Data is read from the original table into this new external table.

5) From the data lake the data is loaded into a dataframe.

6) External table and folder structure in the primary file system are unallocated.

So if we pre-create a schema called SqlAnalyticsConnector and give the spark user permissions over this schema then that should solve it. The script should be like this.

/*

CREATE custom role

*/

CREATE ROLE db_exporter_limited AUTHORIZATION dbo;

/*

Grant permissions to custom roles

*/

GRANT ALTER ANY EXTERNAL DATA SOURCE TO db_exporter_limited;

GRANT ALTER ANY EXTERNAL FILE FORMAT TO db_exporter_limited;

GRANT CREATE TABLE TO db_exporter_limited

GRANT ALTER ON SCHEMA::SqlAnalyticsConnector TO db_exporter_limited

Notes

The only difference from the builtin db_exporter role is the Alter permission is only granted on the SqlAnalyticsConnector schema not all the schemas. The CREATE TABLE permission will only affect the SqlAnalyticsConnector schema.

You still need to give permissions to the spark user to read from the table either by explicit permissions or membership of a role like db_datareader

This doesn’t negate the fact that the user needs access to the data lake as well, as per the documentation, the user needs write access to the workspace file system.