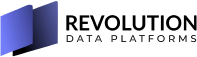

This is an updated version of my article on Medium.com, originally written in December 2019, as some changes have happened since then.

An Azure Databricks workspace is a code authoring and collaboration workspace that can have one or more Apache Spark clusters. As a prerequisite to creating a cluster, there has to be a Virtual Network (VNET) for the machines to attach to. That’s why, if you don’t choose to use your own VNET, a VNET is created for you in the managed resource group.

Many organizations have restrictions on VNET creation and prefer to integrate the Databricks clusters into their own network infrastructure. That’s why Azure Databricks now supports VNET injection, which enables you to use an existing VNET for hosting the Databricks clusters.

The docs list the benefits and requirements of these VNETs, so I won’t list all of them here. The most important ones are:

- The VNET has to be in the same subscription as the workspace

- Address space: a CIDR block between /16 and /24 for the virtual network and a CIDR block up to /26 for the private and public subnets

- Two subnets per workspace

- The same subnet can’t be used by two workspaces. That means every time there’s a new workspace, there’s quite a checklist to go through, which makes hosting multiple projects/departments on the same workspace a favorable idea, but that’s the topic of another article.
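The address space requirements above are easy to check before you ask the network team for a VNET. Here is a minimal sketch using Python’s standard `ipaddress` module. The function name and the sample ranges are hypothetical, and the size limits encode my reading of the requirements listed above, so verify them against the current docs.

```python
import ipaddress


def check_address_space(vnet_cidr, private_cidr, public_cidr):
    """Return a list of problems; an empty list means the sizes look fine."""
    problems = []
    vnet = ipaddress.ip_network(vnet_cidr)
    # Assumed reading of "between /16 and /24": prefix length from 16 to 24.
    if not 16 <= vnet.prefixlen <= 24:
        problems.append(f"VNET {vnet} is not between /16 and /24")

    subnets = {
        "private": ipaddress.ip_network(private_cidr),
        "public": ipaddress.ip_network(public_cidr),
    }
    for name, subnet in subnets.items():
        # Assumed reading of "up to /26": /26 is the smallest allowed subnet.
        if subnet.prefixlen > 26:
            problems.append(f"{name} subnet {subnet} is smaller than /26")
        if not subnet.subnet_of(vnet):
            problems.append(f"{name} subnet {subnet} is outside VNET {vnet}")

    # The two subnets must be distinct ranges.
    if subnets["private"].overlaps(subnets["public"]):
        problems.append("private and public subnets overlap")
    return problems


# Hypothetical address ranges, for illustration only.
ok_case = check_address_space("10.10.0.0/16", "10.10.1.0/24", "10.10.2.0/24")
bad_case = check_address_space("10.10.0.0/26", "10.10.0.0/27", "10.10.0.32/27")
print(ok_case)
print(bad_case)
```

The check does not cover the rule that a subnet can’t be shared by two workspaces, since that needs knowledge of the other workspaces in the subscription.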

But first, what’s deployed inside the customer’s VNET? Is Azure Databricks entirely deployed there? No, the control plane and web UI are always deployed in a Microsoft-managed subscription. That’s why the Azure Databricks UI is always at https://< Azure Region >.azuredatabricks.net

During workspace deployment, no clusters are created yet. So at deployment time, Databricks ensures that the minimum requirements for the clusters to be deployed successfully are met.

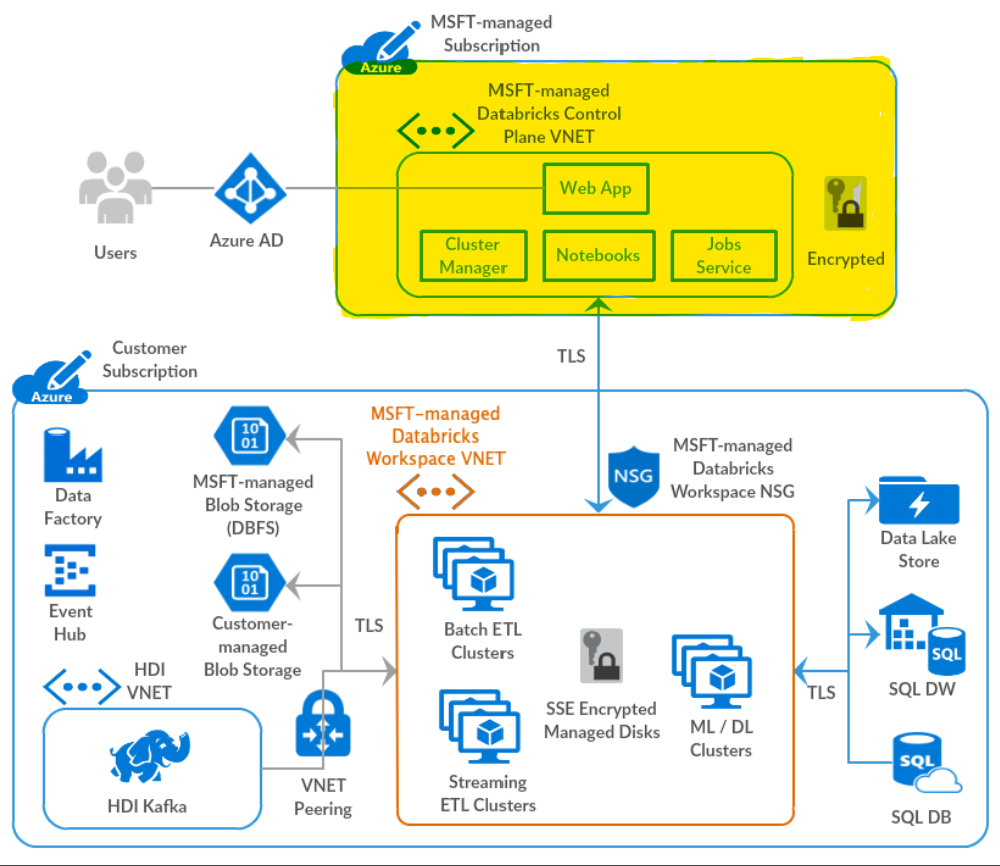

What happens during deployment time?

- Delegation to Microsoft.Databricks/workspaces is made on each subnet. The service uses this delegation to create a Network Intent Policy. Azure Network Intent Policy is a feature used by some Microsoft first-party providers like SQL Managed Instance. It’s not available for customers’ use, and there are no public docs for it. In the case of Databricks, it’s used to maintain the NSG rules added during workspace creation. If you try to delete one of the rules, you will get an error message because of the Intent Policy.

- A check is made for an NSG attached to each subnet. If there isn’t one, the creation of the workspace fails. In the portal experience, the ARM template generated by the portal avoids the failure by creating an NSG and attaching it. It creates only one NSG for both the private and public subnets. The rules are identical anyway: even if you provide two NSGs, you will find the same set of rules in both.

- If there’s an NSG attached, new rules are added and protected by the Intent Policy so they can’t be changed or deleted.
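The two subnet conditions above (a delegation to Microsoft.Databricks/workspaces and an attached NSG) can be verified before you deploy. Below is a minimal sketch that assumes each subnet is available as a dictionary shaped like the ARM subnet resource, for example parsed from exported JSON. The function name and the sample subnets are hypothetical.

```python
DATABRICKS_SERVICE = "Microsoft.Databricks/workspaces"


def subnet_problems(subnet):
    """Return the unmet prerequisites for one ARM-style subnet dictionary."""
    props = subnet.get("properties", {})
    problems = []

    # A delegation entry names the service in properties.serviceName.
    delegated = any(
        d.get("properties", {}).get("serviceName") == DATABRICKS_SERVICE
        for d in props.get("delegations", [])
    )
    if not delegated:
        problems.append(f"missing delegation to {DATABRICKS_SERVICE}")

    # An attached NSG shows up as a reference with a resource id.
    nsg = props.get("networkSecurityGroup") or {}
    if not nsg.get("id"):
        problems.append("no NSG attached")
    return problems


# Hypothetical subnets, for illustration only.
ready = {
    "name": "private-subnet",
    "properties": {
        "delegations": [
            {"name": "dbx", "properties": {"serviceName": DATABRICKS_SERVICE}}
        ],
        "networkSecurityGroup": {"id": "/subscriptions/.../nsg-example"},
    },
}
not_ready = {"name": "public-subnet", "properties": {}}

print(subnet_problems(ready))
print(subnet_problems(not_ready))
```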

I’ve created a sample ARM template on GitHub that deploys a Databricks workspace with VNET integration, but you have to set up the delegation and attach NSGs to the subnets before you deploy the template.

Why have two subnets? Each machine in the Databricks cluster has two virtual network interface cards (NICs): one with a private IP only, attached to the private subnet, and one with both private and public IPs, attached to the public subnet. The public IPs are used to communicate between your cluster and the control plane of Azure Databricks, plus any other data sources that might reside outside your VNET. All the inter-cluster communication happens on the private subnet.

Are the subnets exclusively used by the workspace clusters? No, you can use subnets that already have NICs attached to them. But you can’t have two different workspaces on the same subnet.

Integrating Azure Databricks workspaces with firewalls

One of the main reasons to integrate Azure Databricks workspaces with a VNET is to utilize your existing firewalls. The workflow to do that is:

- Create and assign route tables that force the traffic from Azure Databricks to be filtered by the firewall first.

- In the firewall, add rules to allow the traffic needed for your workspace.

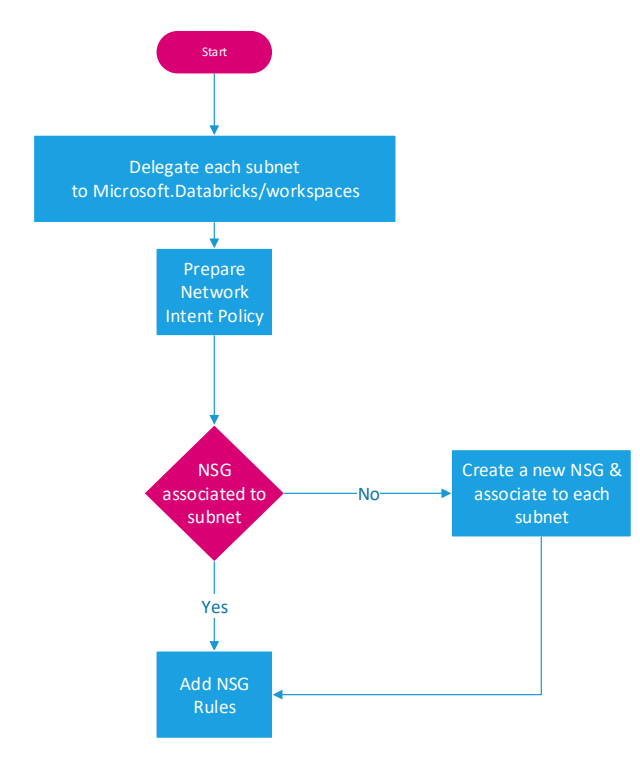

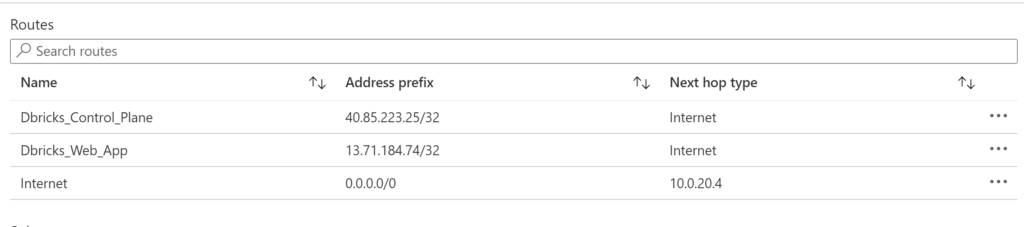

The traffic coming to the public IPs of the workspace clusters doesn’t pass through the firewall. If your route table sends all of 0.0.0.0/0 to the firewall, the firewall only sees the return traffic, and any stateful firewall like Azure Firewall will drop it. You have to add a routing exception for the control plane. The screenshot below shows this problem. For a complete list of the IPs per region, refer to the docs.

Because of how stateful firewalls work, the route table should not send ALL the traffic to the firewall; it should exclude the traffic going to the control plane and the web app of Databricks. Each Azure region where Databricks is enabled has two IP ranges, one for the control plane and one for the web app. These are unique to the region and should be excluded from the routing. The screenshot below shows the routing for a Databricks workspace in the Canada Central region.
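To see why the exclusions work, remember that Azure picks the route with the longest matching prefix. The sketch below models a route table with a default route to the firewall plus two more specific routes that go straight to the Internet. The two excluded ranges are placeholders taken from the documentation address ranges, not the real Databricks ranges, so replace them with the control plane and web app ranges for your region from the docs.

```python
import ipaddress

# (address prefix, next hop type). The two /28 ranges are PLACEHOLDERS.
ROUTES = [
    ("0.0.0.0/0", "VirtualAppliance"),   # everything else goes to the firewall
    ("192.0.2.0/28", "Internet"),        # placeholder for the control plane range
    ("198.51.100.0/28", "Internet"),     # placeholder for the web app range
]


def next_hop(destination_ip, routes=ROUTES):
    """Pick the next hop by longest-prefix match, or None if nothing matches."""
    dest = ipaddress.ip_address(destination_ip)
    matching = [
        (ipaddress.ip_network(prefix), hop)
        for prefix, hop in routes
        if dest in ipaddress.ip_network(prefix)
    ]
    if not matching:
        return None
    # The most specific route (largest prefix length) wins.
    _, hop = max(matching, key=lambda item: item[0].prefixlen)
    return hop


print(next_hop("192.0.2.5"))   # inside the placeholder control plane range
print(next_hop("8.8.8.8"))     # any other destination
```

Traffic to the excluded ranges bypasses the firewall in both directions, so the firewall never sees half of a conversation.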

In my testing, I used Azure Firewall, and I’ll list all the Network Rules and Application Rules I added to get a successful cluster creation and a running sample notebook.

Documented Rules

DBFS root Blob storage IP address

Each workspace has a storage account created and managed by Databricks to act as the local file system of the workspace’s clusters. It is also used to save the notebooks. This storage account lives in the managed resource group of the workspace, and its naming convention is dbstorage< random alphanumeric string>. So far, Databricks doesn’t use private endpoints for these storage accounts (maybe something for the near future), so the traffic destined for this storage account has to pass through the firewall, and you need an Application Rule (using the FQDN) to allow it. I highly recommend not using something like *.blob.core.windows.net, because that allows the Databricks cluster to reach any storage account on Azure anywhere on the globe, which opens the door to exfiltration attacks.
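If you generate your firewall rules from a script, it’s cheap to enforce the "no wildcard on blob storage" advice there. The following is a minimal sketch that builds an application rule in the same shape as the rules listed at the end of this article and refuses wildcard storage FQDNs. The helper name and the storage account name are hypothetical.

```python
import json

BLOB_SUFFIX = ".blob.core.windows.net"


def https_application_rule(name, fqdns):
    """Build an HTTPS application rule, rejecting wildcard blob storage FQDNs."""
    for fqdn in fqdns:
        if "*" in fqdn and fqdn.lower().endswith(BLOB_SUFFIX):
            raise ValueError(f"wildcard storage FQDN not allowed: {fqdn}")
    return {
        "name": name,
        "protocols": [{"protocolType": "Https", "port": 443}],
        "fqdnTags": [],
        "targetFqdns": list(fqdns),
        "sourceAddresses": ["*"],
        "sourceIpGroups": [],
    }


# "dbstorageexample123" is a made-up account name, for illustration only.
rule = https_application_rule(
    "dbfs-root", ["dbstorageexample123.blob.core.windows.net"]
)
print(json.dumps(rule, indent=2))
```

Wildcards on other domains are still accepted, so the helper only guards the storage case discussed above.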

Metastore, artifact Blob storage, log Blob storage, and Event Hub endpoint IP addresses

**Metastore** is a MySQL database where the workspace metadata is saved. If your firewall rules use an IP and port combination, you need to resolve the IP of the FQDN listed in the docs and use port 3306. For example, for Canada Central:

    nslookup consolidated-canadacentral-prod-metastore.mysql.database.azure.com
    Name:    cr2.canadacentral1-a.control.database.windows.net
    Address: 52.228.35.221
    Aliases: consolidated-canadacentral-prod-metastore.mysql.database.azure.com

In Azure Firewall, we can use the FQDN in the network rules, so there’s no need to get the IP. If your firewall doesn’t support that, get the IP and update it periodically.

**Artifact Blob storage**: both the primary and secondary storage accounts (which can be the same account in some regions) are where the scripts and binary files of Databricks are saved.

**Log Blob storage** is another storage account, used for cluster logs.

**Event Hub endpoint** is used for shipping logs as well. It is the Event Hub Kafka endpoint, so it uses port 9093. For more details, refer to the docs.
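For firewalls that only accept IP addresses, the periodic update of the metastore IP mentioned above can be scripted. This is a minimal sketch using the standard `socket` module. The function names are hypothetical, and the resolver is passed in as a parameter so the comparison logic can be tried without network access.

```python
import socket


def resolve_ipv4(fqdn):
    """Resolve an FQDN to its set of IPv4 addresses using the system resolver."""
    infos = socket.getaddrinfo(
        fqdn, None, family=socket.AF_INET, type=socket.SOCK_STREAM
    )
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return {info[4][0] for info in infos}


def ip_changes(fqdn, known_ips, resolver=resolve_ipv4):
    """Return (added, removed) IPs compared with what the firewall rule holds."""
    current = set(resolver(fqdn))
    known = set(known_ips)
    return sorted(current - known), sorted(known - current)


# Offline example with a fake resolver; 203.0.113.7 is a documentation address.
fake_resolver = lambda fqdn: {"203.0.113.7"}
added, removed = ip_changes(
    "consolidated-canadacentral-prod-metastore.mysql.database.azure.com",
    {"52.228.35.221"},
    resolver=fake_resolver,
)
print("add to rule:", added)
print("remove from rule:", removed)
```

Run it on a schedule with the default resolver and update the port 3306 rule whenever either list is non-empty.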

Special un-documented domains

During my testing with Azure Firewall and Databricks, I found that the docs didn’t cover all the FQDNs requested by my cluster. My testing turned up the extra ones below. For each, I added what I collected about it from different sources, and my decision.

- Ubuntu updates: used for Ubuntu updates, since Databricks is built on an Ubuntu image ==> enabled *.ubuntu.com

- snap packages: package management for Ubuntu ==> enabled *.snapcraft.io

- terracotta → *.terracotta.org

- cloudflare → *.cloudflare.com

- ICMP Type 8 (Ping) to 172.217.13.164, which is an IP that belongs to Google. I didn’t see any docs for it, but since it’s just ping, I didn’t see an issue with that traffic. It can be a heartbeat to check if the server has connectivity to the internet. It can also be a connectivity check triggered by exceptions raised by blocked URLs.

- When accessing the quickstart notebook and reading the sample data with the code it provides:

It will throw an error, and in the firewall logs you will find denied access to sts.amazonaws.com, which means that the sample data is still in S3 buckets. I didn’t allow this URL in this testing round, but if you do, expect another URL or two to show up. These are the exact buckets that host the sample dataset. They will be of the form <bucket name>.s3.amazonaws.com

- nvidia.github.io: used to pull NVIDIA drivers. In my testing, I didn’t have any clusters with GPUs, but traffic to the NVIDIA GitHub pages was still recorded, so it seems to be needed for all clusters, not just GPU-equipped ones.

- deb.nodesource.com: this is a shortcut to https://github.com/nodesource/distributions, which has the Node.js distribution packages.

- files.pythonhosted.org: this site hosts packages and documentation uploaded by authors of packages on the Python Package Index.

- UDP on port 123 for Network Time Protocol ==> created a network rule for the UDP protocol with destination port 123

- pypi.org: for Python PyPI packages

- Azure Monitor: in the testing we found two storage accounts not related to Databricks: zrdfepirv2yto21prdstr02a.blob.core.windows.net and zrdfepirv2yt1prdstr06a.blob.core.windows.net. I’m still waiting for final confirmation from the Azure Monitor team, but all the investigations indicate that these are used by the Azure Monitor Linux agent. But why do we get a deny on Azure Monitor storage accounts when we enable the AzureMonitor service tag? There’s a catch: as per the docs, the AzureMonitor service tag has a dependency on the Storage tag. To be on the safe side you would need to add the Storage service tag to your Azure Firewall network rules, but allowing all the traffic to all the storage accounts in a region is too much. In my customer’s deployments, we relied on reading the logs for denied access and added these storage accounts one by one. This approach is of course more restrictive and may fail after a while when these storage accounts change, but it’s better for security.
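Reading the deny logs one by one gets tedious, so here is a minimal sketch that summarizes denied FQDNs from exported application rule log messages. It assumes each message looks like the sample lines in the code, which is how I remember the Azure Firewall application rule messages being shaped; treat both the pattern and the sample lines as assumptions and adjust them to what your own logs contain.

```python
import re
from collections import Counter

# Assumed message shape: "... request from <ip>:<port> to <fqdn>:<port>. Action: Deny..."
DENY_PATTERN = re.compile(
    r"request from \S+ to (?P<fqdn>[^\s:]+):\d+\. Action: Deny"
)


def denied_fqdns(lines):
    """Count how many times each destination FQDN was denied."""
    counts = Counter()
    for line in lines:
        match = DENY_PATTERN.search(line)
        if match:
            counts[match.group("fqdn").lower()] += 1
    return counts


# Illustrative lines only, written in the assumed format.
SAMPLE = [
    "HTTPS request from 10.1.0.5:55640 to zrdfepirv2yto21prdstr02a.blob.core.windows.net:443. Action: Deny. No rule matched. Proceeding with default action",
    "HTTPS request from 10.1.0.6:55712 to zrdfepirv2yto21prdstr02a.blob.core.windows.net:443. Action: Deny. No rule matched. Proceeding with default action",
    "HTTPS request from 10.1.0.5:55801 to pypi.org:443. Action: Allow. Rule Collection: dbfs-blob-storages. Rule: pypi.org",
]

for fqdn, hits in denied_fqdns(SAMPLE).most_common():
    print(hits, fqdn)
```

Each FQDN it reports is a candidate for a new, specific application rule, after you have confirmed what it is used for.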

I didn’t record a new video for the new setup, but the one from December 2019 is still valid as a guide.

References

I’ve added the NetworkRules & ApplicationRules of Azure Firewall in these gists.

Network Rules

    "networkRuleCollections": [
      {
        "name": "azure-infra",
        "properties": {
          "priority": 120,
          "action": { "type": "Allow" },
          "rules": [
            {
              "name": "azure-monitor",
              "protocols": ["TCP"],
              "sourceAddresses": ["*"],
              "destinationAddresses": [
                "AzureMonitor.CanadaCentral",
                "AzureMonitor.CanadaEast",
                "ApplicationInsightsAvailability",
                "AzureBackup",
                "GuestAndHybridManagement",
                "AzureMonitor"
              ],
              "sourceIpGroups": [],
              "destinationIpGroups": [],
              "destinationFqdns": [],
              "destinationPorts": ["443"]
            }
          ]
        }
      },
      {
        "name": "Dbricks-rules",
        "properties": {
          "priority": 200,
          "action": { "type": "Allow" },
          "rules": [
            {
              "name": "NTP",
              "protocols": ["UDP"],
              "sourceAddresses": ["*"],
              "destinationAddresses": ["*"],
              "sourceIpGroups": [],
              "destinationIpGroups": [],
              "destinationFqdns": [],
              "destinationPorts": ["123"]
            },
            {
              "name": "ping",
              "protocols": ["ICMP"],
              "sourceAddresses": ["*"],
              "destinationAddresses": ["*"],
              "sourceIpGroups": [],
              "destinationIpGroups": [],
              "destinationFqdns": [],
              "destinationPorts": ["*"]
            },
            {
              "name": "metastore-mysql",
              "protocols": ["TCP"],
              "sourceAddresses": ["*"],
              "destinationAddresses": [],
              "sourceIpGroups": [],
              "destinationIpGroups": [],
              "destinationFqdns": [
                "consolidated-canadacentral-prod-metastore.mysql.database.azure.com"
              ],
              "destinationPorts": ["3306"]
            },
            {
              "name": "eventhub",
              "protocols": ["TCP"],
              "sourceAddresses": ["*"],
              "destinationAddresses": [],
              "sourceIpGroups": [],
              "destinationIpGroups": [],
              "destinationFqdns": [
                "prod-canadacentral-observabilityeventhubs.servicebus.windows.net"
              ],
              "destinationPorts": ["5671", "5672", "9350-9354", "9093"]
            }
          ]
        }
      }
    ]

Application Rules

    "applicationRuleCollections": [
      {
        "name": "dbfs-blob-storages",
        "properties": {
          "priority": 200,
          "action": { "type": "Allow" },
          "rules": [
            {
              "name": "ppe-dbfs",
              "protocols": [{ "protocolType": "Https", "port": 443 }],
              "fqdnTags": [],
              "targetFqdns": ["dbstorage**removed**.blob.core.windows.net"],
              "sourceAddresses": ["*"],
              "sourceIpGroups": []
            },
            {
              "name": "Artifact-Blob-storage-primary",
              "protocols": [{ "protocolType": "Https", "port": 443 }],
              "fqdnTags": [],
              "targetFqdns": ["dbartifactsprodcacentral.blob.core.windows.net"],
              "sourceAddresses": ["*"],
              "sourceIpGroups": []
            },
            {
              "name": "Artifact-Blob-storage-secondary",
              "protocols": [{ "protocolType": "Https", "port": 443 }],
              "fqdnTags": [],
              "targetFqdns": [
                "dbartifactsprodcacentral.blob.core.windows.net",
                "dbartifactsprodcaeast.blob.core.windows.net"
              ],
              "sourceAddresses": ["*"],
              "sourceIpGroups": []
            },
            {
              "name": "log-blob-storage",
              "protocols": [{ "protocolType": "Https", "port": 443 }],
              "fqdnTags": [],
              "targetFqdns": ["dblogprodcacentral.blob.core.windows.net"],
              "sourceAddresses": ["*"],
              "sourceIpGroups": []
            },
            {
              "name": "ubuntu-updates",
              "protocols": [{ "protocolType": "Https", "port": 443 }],
              "fqdnTags": [],
              "targetFqdns": ["*.ubuntu.com"],
              "sourceAddresses": ["*"],
              "sourceIpGroups": []
            },
            {
              "name": "cloudflare",
              "protocols": [{ "protocolType": "Https", "port": 443 }],
              "fqdnTags": [],
              "targetFqdns": ["*.cloudflare.com"],
              "sourceAddresses": ["*"],
              "sourceIpGroups": []
            },
            {
              "name": "snap-packages",
              "protocols": [{ "protocolType": "Https", "port": 443 }],
              "fqdnTags": [],
              "targetFqdns": ["*.snapcraft.io"],
              "sourceAddresses": ["*"],
              "sourceIpGroups": []
            },
            {
              "name": "nvidia.github.io",
              "protocols": [{ "protocolType": "Https", "port": 443 }],
              "fqdnTags": [],
              "targetFqdns": ["nvidia.github.io"],
              "sourceAddresses": ["*"],
              "sourceIpGroups": []
            },
            {
              "name": "deb.nodesource.com",
              "protocols": [{ "protocolType": "Https", "port": 443 }],
              "fqdnTags": [],
              "targetFqdns": ["deb.nodesource.com"],
              "sourceAddresses": ["*"],
              "sourceIpGroups": []
            },
            {
              "name": "www.terracotta.org",
              "protocols": [
                { "protocolType": "Http", "port": 80 },
                { "protocolType": "Https", "port": 443 }
              ],
              "fqdnTags": [],
              "targetFqdns": ["terracotta.org"],
              "sourceAddresses": ["*"],
              "sourceIpGroups": []
            },
            {
              "name": "files.pythonhosted.org",
              "protocols": [{ "protocolType": "Https", "port": 443 }],
              "fqdnTags": [],
              "targetFqdns": ["files.pythonhosted.org"],
              "sourceAddresses": ["*"],
              "sourceIpGroups": []
            },
            {
              "name": "pypi.org",
              "protocols": [{ "protocolType": "Https", "port": 443 }],
              "fqdnTags": [],
              "targetFqdns": ["pypi.org"],
              "sourceAddresses": ["*"],
              "sourceIpGroups": []
            },
            {
              "name": "www.terracotta.org",
              "protocols": [
                { "protocolType": "Http", "port": 80 },
                { "protocolType": "Https", "port": 443 }
              ],
              "fqdnTags": [],
              "targetFqdns": ["www.terracotta.org"],
              "sourceAddresses": ["*"],
              "sourceIpGroups": []
            }
          ]
        }
      }
    ]